Kafka Fundamental Concepts

What is Kafka?

Apache Kafka is an event streaming platform used to collect, process, store, and integrate data at scale. It has numerous use cases including distributed logging, stream processing, data integration, and pub/sub messaging.

Kafka Architecture - Fundamental Concepts

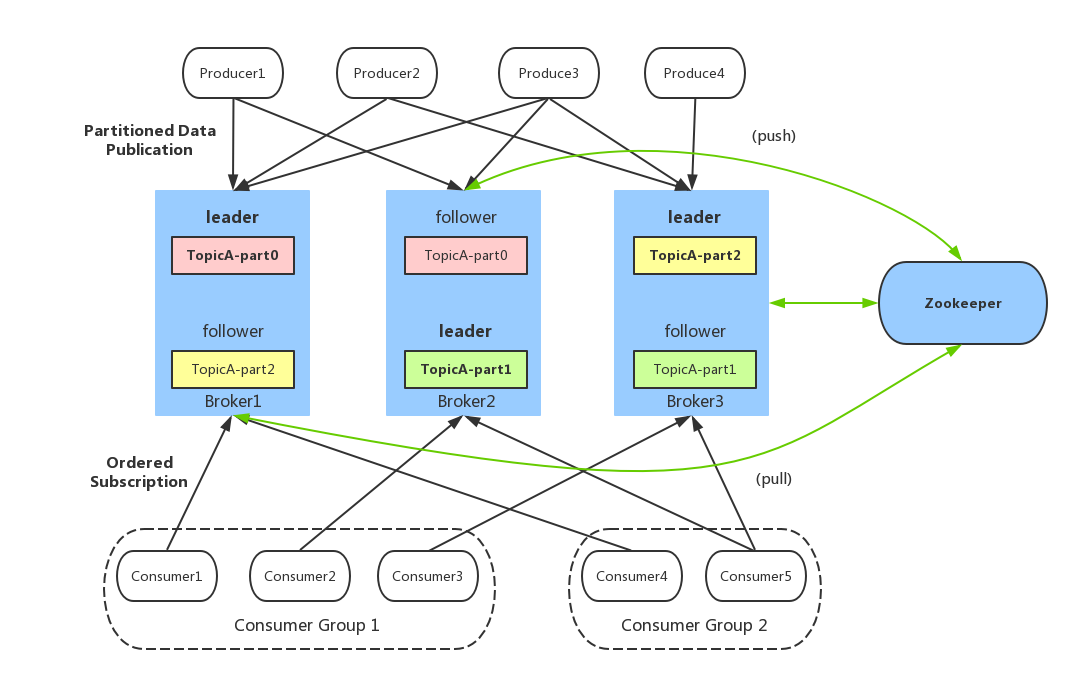

- Topic: Use different topics to hold different kinds of events. Here we have only one topic, named TopicA.

- Partitioning / Topic-Partition: Partitioning takes the single topic log and breaks it into multiple logs, each of which can live on a separate node in the Kafka cluster. This way, the work of storing messages, writing new messages, and processing existing messages can be split among many nodes in the cluster.

- Broker: Kafka is composed of a network of machines called brokers.

- Producer and Consumer: These are client applications that contain your code, putting messages into topics and reading messages from topics.

- ZooKeeper: An external service that stores Kafka's metadata. Newer Kafka versions can also run in KRaft mode, which does not need ZooKeeper.

- Consumer Group: Consumers can be placed in a group so that each message is delivered to only one consumer in that group, instead of every consumer receiving the same message.

- Replication, leader and follower: Partition data is copied to several other brokers to keep it safe. Those copies are called follower replicas, whereas the main copy is called the leader replica. In general, reads and writes go to the leader, and the followers then replicate the new writes from it.
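
To make the partitioning and consumer-group ideas above more concrete, here is a minimal sketch in plain Python. It does not use a Kafka client. The function names `choose_partition` and `assign_partitions` are hypothetical, and `zlib.crc32` is only a stand-in for the hash a real producer uses, so the partition numbers will not match a real cluster.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition (illustrative stand-in hash, not Kafka's)."""
    return zlib.crc32(key) % num_partitions


def assign_partitions(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Spread partitions across the consumers of one group, round-robin.

    Each partition ends up with exactly one consumer, so no two consumers
    in the same group read the same message.
    """
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(sorted(partitions)):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment


if __name__ == "__main__":
    # The same key always maps to the same partition.
    print(choose_partition(b"user-42", 3))
    # Three partitions of TopicA shared by a group of two consumers.
    print(assign_partitions([0, 1, 2], ["consumer-1", "consumer-2"]))
```

With three partitions and two consumers, one consumer owns two partitions and the other owns one. Real Kafka clients offer several assignment strategies, so treat the round-robin loop as one possible illustration.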

More about leader and follower

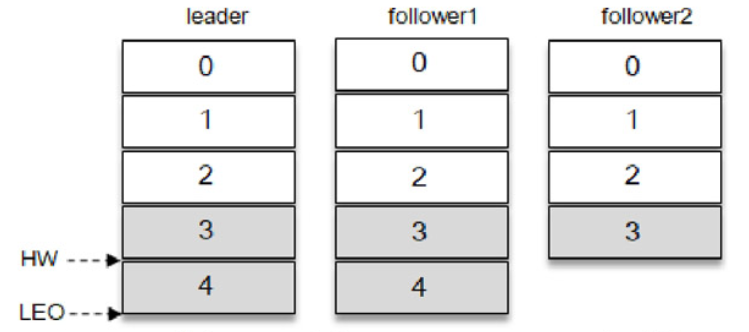

Let’s look at a single partition.

All replicas of a partition, leader and followers alike, are called AR (Assigned Replicas). After the leader receives messages, the followers pull them from the leader. Followers that keep up with the leader, together with the leader itself, are called ISR (In-Sync Replicas). A replica that lags too far behind becomes an OSR (Out-of-Sync Replica). It follows that AR = ISR + OSR.
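
The AR = ISR + OSR relationship can be sketched as a simple split of the replica set. This is an illustration under assumptions, not broker code: the function name `split_replicas` and the timestamps are hypothetical, and the 30-second threshold is only an example value standing in for the broker setting `replica.lag.time.max.ms`.

```python
def split_replicas(
    last_caught_up_ms: dict[str, int],
    now_ms: int,
    max_lag_ms: int = 30_000,  # example threshold, an assumption for this sketch
) -> tuple[list[str], list[str]]:
    """Split assigned replicas (AR) into in-sync (ISR) and out-of-sync (OSR).

    A replica counts as in sync if it was fully caught up with the leader
    within the last `max_lag_ms` milliseconds.
    """
    isr = sorted(r for r, t in last_caught_up_ms.items() if now_ms - t <= max_lag_ms)
    osr = sorted(r for r in last_caught_up_ms if r not in isr)
    return isr, osr


if __name__ == "__main__":
    # Hypothetical timestamps: follower2 last caught up 50 seconds ago.
    ar = {"leader": 100_000, "follower1": 99_500, "follower2": 50_000}
    isr, osr = split_replicas(ar, now_ms=100_000)
    print("ISR:", isr)
    print("OSR:", osr)
    # Every assigned replica is in exactly one of the two sets.
    assert len(isr) + len(osr) == len(ar)
```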

Within each partition, Kafka uses an offset to locate each message, much like an index into an array.

LEO is short for Log End Offset: the offset at which the next message will be appended. In the picture, the leader has received 5 messages (offsets 0 to 4), so its LEO is 5. The LEO of follower1 is 5 as well. However, follower2 has not yet caught up with the latest message, so its LEO is 4.

In this case, consumers can only read the messages at offsets 0 through 3 for the time being. Kafka only lets consumers read messages that have been saved by all ISRs. To mark the range of messages that can be consumed, Kafka uses the HW (High Watermark). Like the LEO, it is expressed as the offset after the last included message, so here it is 4.
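
The numbers from the picture can be checked with a short calculation. The sketch below treats the high watermark as the smallest LEO among the in-sync replicas, which matches the description above. The function name `high_watermark` is hypothetical, and a real broker updates this value incrementally as followers fetch rather than computing it in one step.

```python
def high_watermark(leo_by_replica: dict[str, int], isr: list[str]) -> int:
    """Return the smallest log end offset (LEO) among the in-sync replicas."""
    return min(leo_by_replica[replica] for replica in isr)


if __name__ == "__main__":
    # LEO values taken from the example in the text.
    leo = {"leader": 5, "follower1": 5, "follower2": 4}
    isr = ["leader", "follower1", "follower2"]

    hw = high_watermark(leo, isr)
    consumable_offsets = list(range(hw))  # offsets strictly below the HW

    print("HW:", hw)                          # HW: 4
    print("consumable:", consumable_offsets)  # consumable: [0, 1, 2, 3]
```

Once follower2 fetches the message at offset 4, its LEO becomes 5, the minimum rises to 5, and that message becomes visible to consumers.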

To show the details of partitions, you can use the command below.

    $KAFKA_HOME/bin/kafka-topics.sh --describe --topic test --bootstrap-server <your brokers>